Deep Learning Projects (Summer '22)

Skills learned: Gained insight into the differences between AI, ML, and DL; proficiency in TensorFlow and Keras; and an understanding of the Google Colaboratory workflow.

References used: Deep Learning Illustrated, TensorFlow 2.0 Complete Course

Explanation of AI, ML, and DL:

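- Artificial Intelligence: The broad effort to automate tasks normally done by humans; in its classic form it employs a set of rules written by hand

- Machine Learning: Uses an algorithm to generate the rules for us from data, instead of having a person write them

- Neural Networks (Deep Learning): A form of machine learning that utilizes a layered representation of data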
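
The difference between hand-written rules and learned rules can be sketched with a toy example. The function names and the tiny data set below are hypothetical and chosen only for illustration, and the "learning" step is a simple threshold search rather than a neural network.

```python
# Toy contrast: a hand-written rule vs. a rule derived from labeled data.
# All names and numbers here are hypothetical illustrations.

def rule_based_is_hot(temp_c):
    """Rule-based approach: a person picks the threshold by hand."""
    return temp_c >= 30.0

def learn_threshold(temps, labels):
    """Learning approach: pick the threshold that best fits labeled examples."""
    best_threshold, best_correct = None, -1
    for candidate in sorted(temps):
        # Count how many examples this candidate threshold classifies correctly.
        correct = sum((t >= candidate) == y for t, y in zip(temps, labels))
        if correct > best_correct:
            best_threshold, best_correct = candidate, correct
    return best_threshold

temps = [12.0, 18.0, 21.0, 26.0, 29.0, 33.0]
labels = [False, False, False, True, True, True]  # hypothetical "hot" labels

learned = learn_threshold(temps, labels)
print(learned)  # 26.0
```

In the first function the rule is fixed in advance; in the second, the rule (the threshold) comes out of the data.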

Software and libraries used:

- TensorFlow: Free and open-source library for machine learning and AI.

- Keras: Open-source Python interface for artificial neural networks.

- Google Colaboratory (Colab): Hosted notebook environment that allows combining executable code, text, images, and more in one document.

Practical application of skills:

Worked through several small projects from the book "Deep Learning Illustrated" by forking a repository of Google Colaboratory notebooks written by the book's author.

Shallownet in Keras

Description:

An artificial neural network is created with Keras and TensorFlow. This network is used to predict handwritten digits from the MNIST handwritten digits shown above. The network's architecture consists of an input layer that takes each 28x28-pixel image of a digit, a single hidden layer of 64 sigmoid neurons, and an output layer of 10 softmax neurons, each of which holds the probability that the digit is a certain value from 0 to 9.
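
As a rough size check on this architecture, the number of trainable parameters can be worked out by hand. The helper name `dense_params` is hypothetical; the sketch assumes fully connected layers with one bias per neuron, which is the default for Keras `Dense` layers.

```python
# Count trainable parameters for the network described above:
# 784 inputs -> 64 sigmoid neurons -> 10 softmax neurons.

def dense_params(n_inputs, n_units):
    """Weights (n_inputs * n_units) plus one bias per unit."""
    return n_inputs * n_units + n_units

n_inputs = 28 * 28                      # each image flattened to 784 values
hidden_params = dense_params(n_inputs, 64)
output_params = dense_params(64, 10)
total_params = hidden_params + output_params

print(hidden_params, output_params, total_params)  # 50240 650 50890
```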

Steps Taken:

1. Import Dependencies:

import keras

from keras.datasets import mnist

from keras.models import Sequential

from keras.layers import Dense

from keras.optimizers import SGD

from matplotlib import pyplot as plt

2. Load MNIST Data:

(X_train, y_train), (X_valid, y_valid) = mnist.load_data()

X_train.shape  # (60000, 28, 28)

y_train.shape  # (60000,)

X_valid.shape  # (10000, 28, 28)

y_valid.shape  # (10000,)

3. Reformat the Data:

# Flatten each 28x28 image to a 784-element vector and convert pixel integers to floats

X_train = X_train.reshape(60000, 784).astype('float32')

X_valid = X_valid.reshape(10000, 784).astype('float32')

# Scale pixel values from the range 0-255 to the range 0-1

X_train /= 255

X_valid /= 255

# Convert integer labels to one-hot vectors

n_classes = 10

y_train = keras.utils.to_categorical(y_train, n_classes)

y_valid = keras.utils.to_categorical(y_valid, n_classes)
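
To make the preprocessing concrete, here is a minimal pure-Python sketch of what the pixel scaling and the one-hot conversion do to a single example. The helpers `scale_pixels` and `one_hot` are hypothetical stand-ins written for illustration; they are not the Keras implementation.

```python
def scale_pixels(pixels):
    """Map 8-bit pixel intensities (0-255) into the range 0-1."""
    return [p / 255 for p in pixels]

def one_hot(label, n_classes=10):
    """Return a vector of zeros with a single 1.0 at the label's index."""
    encoded = [0.0] * n_classes
    encoded[label] = 1.0
    return encoded

print(scale_pixels([0, 51, 255]))  # [0.0, 0.2, 1.0]
print(one_hot(7))  # [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0]
```

The one-hot form matches the shape of the 10-neuron softmax output, so the label and the prediction can be compared element by element.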

4. Architect the Network:

model = Sequential()

model.add(Dense(64, activation='sigmoid', input_shape=(784,)))

model.add(Dense(10, activation='softmax'))

model.compile(loss='mean_squared_error', optimizer=SGD(learning_rate=0.01), metrics=['accuracy'])
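
The softmax output layer and the mean squared error loss chosen above can be illustrated in plain Python. This is a sketch for intuition only: the raw scores in `logits` are made up, and the functions are simplified stand-ins rather than the Keras internals.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]  # subtract max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

def mean_squared_error(y_true, y_pred):
    """Average squared difference between target and prediction."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical raw scores for one image; class 3 has the highest score.
logits = [0.0] * 10
logits[3] = 2.0

probs = softmax(logits)
target = [0.0] * 10
target[3] = 1.0  # one-hot label for the digit 3

loss = mean_squared_error(target, probs)
print(probs.index(max(probs)))  # 3
print(loss)
```

The loss shrinks toward zero as the predicted probability for the correct digit approaches 1.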

5. Train the Network:

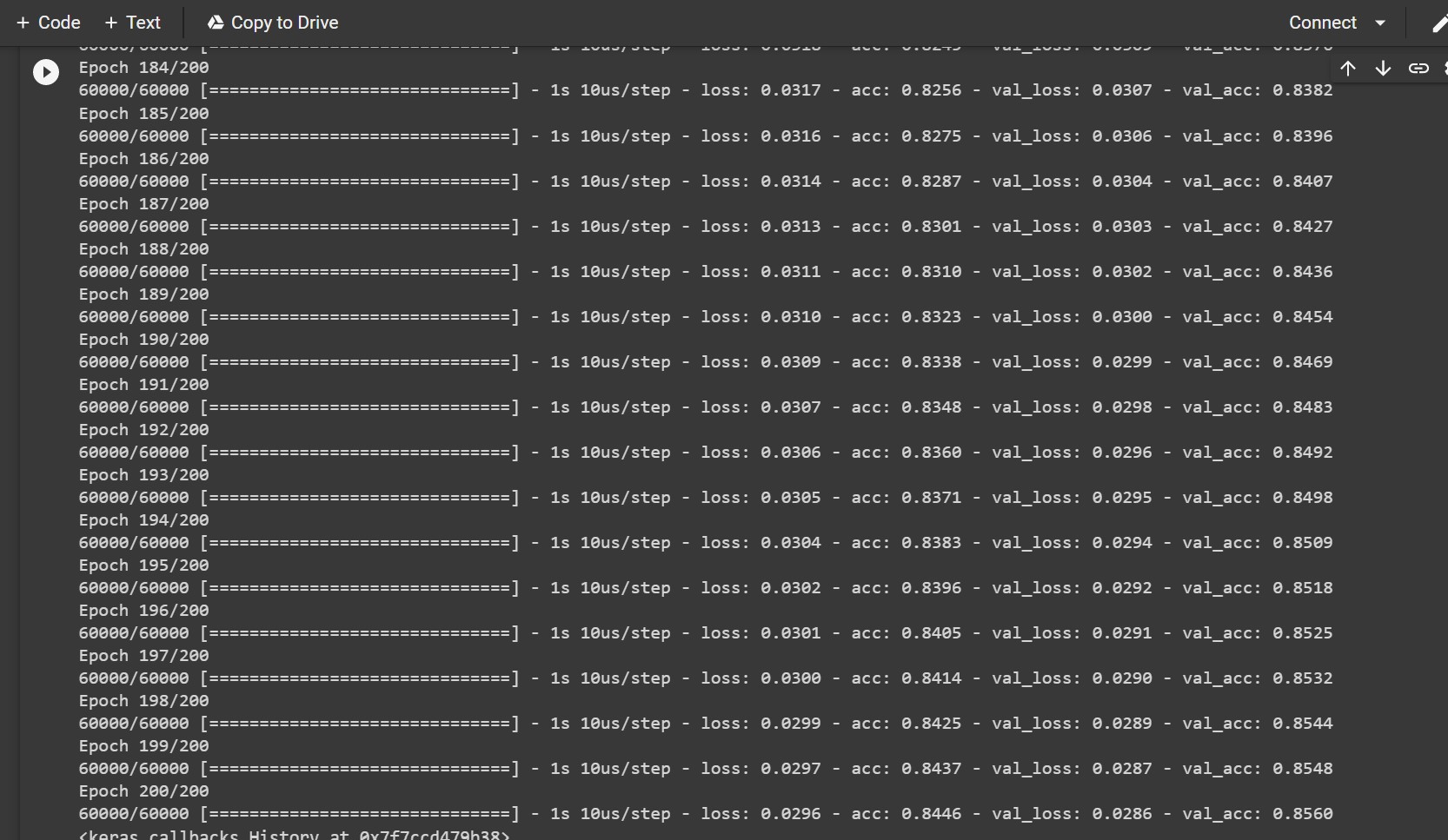

model.fit(X_train, y_train, batch_size=128, epochs=200, verbose=1, validation_data=(X_valid, y_valid))
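
For a sense of how much work this call represents, the number of weight updates can be estimated from the batch size and epoch count. The sketch below assumes the final, smaller batch of each epoch is still used, and `sgd_step` is a hypothetical helper showing the plain gradient descent update rule, not the Keras optimizer code.

```python
import math

n_train = 60000     # MNIST training images
batch_size = 128
epochs = 200

# One weight update per mini-batch, including the last partial batch.
steps_per_epoch = math.ceil(n_train / batch_size)
total_updates = steps_per_epoch * epochs
print(steps_per_epoch, total_updates)  # 469 93800

def sgd_step(weights, grads, learning_rate=0.01):
    """Plain SGD: move each weight a small step against its gradient."""
    return [w - learning_rate * g for w, g in zip(weights, grads)]

print(sgd_step([1.0, -1.0], [2.0, 2.0], learning_rate=0.5))  # [0.0, -2.0]
```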

Outcomes:

The network approaches 86% accuracy after 200 epochs of training, as shown below.